When Repetition Leads to Ruin: Understanding Palindromes in Systemic Failures

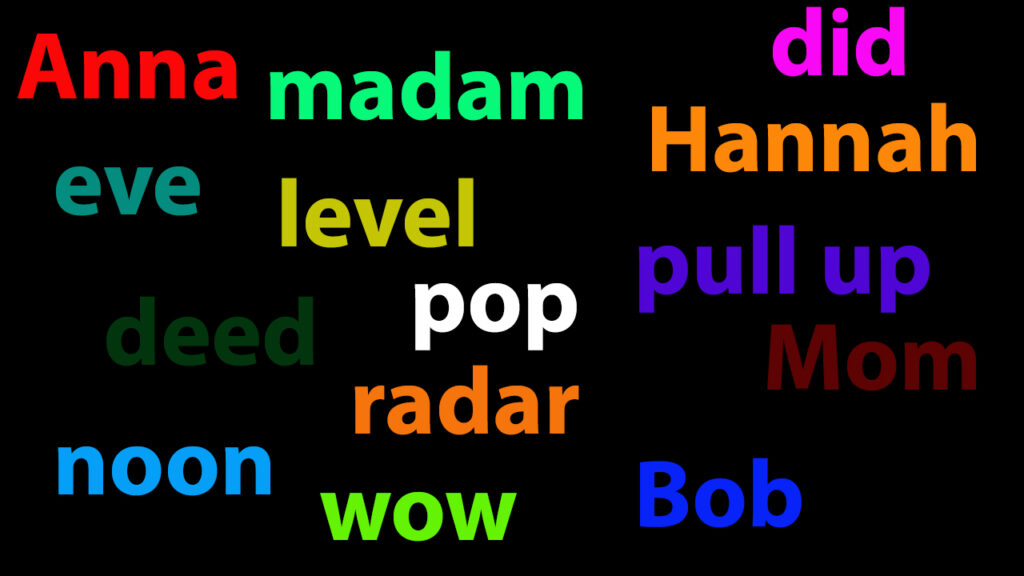

The phrase “palindrome for something that fails to work” might seem paradoxical at first glance. After all, a palindrome is traditionally understood as a word, phrase, number, or sequence of characters that reads the same backward as forward (e.g., “madam,” “racecar”). But what happens when repetition and symmetry show up in systems, processes, or product designs and lead not to balance but to breakdown? This article examines how repetitive elements, mirroring effects, and self-referential loops can contribute to the failure of a system, product, or strategy. We explore how this “palindrome effect” manifests in various contexts, work through illustrative examples, and offer a framework for identifying and mitigating these self-defeating patterns before they lead to costly or even catastrophic outcomes.

The Core Concept: Palindromic Failure

At its heart, the idea of a “palindrome for something that fails to work” suggests a system or process that, through its own internal logic or design, contains a self-destructive loop. This loop can be literal, where a process repeats the same failing steps, or more subtle, where actions intended to correct a problem inadvertently exacerbate it, creating a feedback cycle of failure. Think of it as a system that mirrors its own weaknesses, amplifying them with each iteration. This concept moves beyond the simple definition of a palindrome to explore how repetitive patterns can lead to negative outcomes.

The key to understanding this lies in recognizing that not all repetition is beneficial. While redundancy can improve reliability in some systems, uncontrolled or poorly designed repetition can introduce vulnerabilities. This is especially true when the system relies on flawed data, assumptions, or processes. In such cases, the palindromic effect can create a self-fulfilling prophecy of failure.

The relevance of this concept in today’s complex world is significant. As systems become more interconnected and interdependent, the potential for feedback loops and unintended consequences increases. Recognizing and addressing these palindromic failure modes is crucial for building robust and resilient systems that can withstand unforeseen challenges.

Product Examples: Where Symmetry Turns Sour

While the concept might seem abstract, several product examples illustrate the “palindrome for something that fails to work” principle. Consider a poorly designed recommendation algorithm for an e-commerce website. If the algorithm is biased towards recommending products that are already popular, it can create a feedback loop where those products become even more popular, while niche or less-known products are effectively hidden. This creates a palindromic effect: the algorithm’s initial bias reinforces itself, leading to a homogenization of product offerings and potentially alienating customers seeking variety.
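The popularity loop can be reproduced with a toy simulation. The sketch below is illustrative only: the function name `simulate`, the item labels, and the user model (every shown item gets exactly one click per round) are assumptions made for this example, not a description of any real recommender.

```python
def simulate(clicks, rounds, slots, explore_slots=0):
    """Return click counts after `rounds` rounds of recommendations.

    Each round the recommender shows the `slots` most-clicked items, and
    every shown item receives one click (a deliberately crude user model).
    If `explore_slots` > 0, that many slots go to the least-clicked items
    instead, which is one simple way to weaken the loop.
    """
    clicks = dict(clicks)  # copy so the caller's data is not mutated
    for _ in range(rounds):
        # Most-clicked first; ties broken by name so the run is deterministic.
        ranked = sorted(clicks, key=lambda item: (-clicks[item], item))
        shown = ranked[: slots - explore_slots]
        if explore_slots:
            remaining = [item for item in ranked if item not in shown]
            # Least-clicked first among the items not already shown.
            remaining.sort(key=lambda item: (clicks[item], item))
            shown += remaining[:explore_slots]
        for item in shown:
            clicks[item] += 1
    return clicks


start = {"a": 3, "b": 2, "c": 1, "d": 1}
biased = simulate(start, rounds=10, slots=2)
explored = simulate(start, rounds=10, slots=2, explore_slots=1)
print("popularity only:", biased)
print("with exploration:", explored)
```

In the popularity-only run, items `c` and `d` are never shown again, so their counts never change. Reserving a single slot for under-exposed items is enough to give them a chance to accumulate clicks.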

Another example can be found in poorly implemented automated trading systems. If the system is designed to react to market fluctuations in a specific way, and those fluctuations are themselves influenced by the system’s actions, a dangerous feedback loop can emerge. For instance, if the system is designed to automatically sell assets when prices drop, and its actions contribute to further price drops, it can trigger a cascading sell-off, creating a palindromic effect of market instability.
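A minimal sketch of this sell-off loop follows. It assumes a single hypothetical trading rule (sell whenever the last price drop exceeds `trigger_drop`) and a fixed price impact per sale; the function `run_market` and all of its parameters are invented for illustration and do not model any real market or trading system.

```python
def run_market(start_price, shock, trigger_drop, sell_impact, steps,
               max_consecutive_sells=None):
    """Simulate prices when an automated seller reacts to its own impact.

    The seller sells whenever the most recent relative price drop is at
    least `trigger_drop`. Each sale pushes the price down by `sell_impact`
    (a fraction). If `max_consecutive_sells` is set, selling pauses for one
    step after that many sales in a row, acting as a crude circuit breaker.
    """
    prices = [start_price, start_price - shock]  # an external shock starts it
    consecutive = 0
    for _ in range(steps):
        prev, last = prices[-2], prices[-1]
        drop = (prev - last) / prev
        halted = (max_consecutive_sells is not None
                  and consecutive >= max_consecutive_sells)
        if drop >= trigger_drop and not halted:
            prices.append(last * (1 - sell_impact))  # the sale moves the price
            consecutive += 1
        else:
            prices.append(last)  # no sale, price holds
            consecutive = 0
    return prices


unguarded = run_market(100.0, 5.0, trigger_drop=0.02, sell_impact=0.03, steps=10)
guarded = run_market(100.0, 5.0, trigger_drop=0.02, sell_impact=0.03, steps=10,
                     max_consecutive_sells=3)
print("final price without breaker:", round(unguarded[-1], 2))
print("final price with breaker:   ", round(guarded[-1], 2))
```

Because each sale causes a drop larger than the trigger, the unguarded seller keeps reacting to its own actions on every step. The pause breaks the chain: once the price holds for one step, the trigger condition is no longer met.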

Even in physical product design, this principle applies. Consider a self-balancing scooter with flawed gyroscopic sensors. If the sensors provide inaccurate readings, the scooter’s control system will attempt to compensate, potentially overcorrecting and leading to instability. This creates a feedback loop where the sensor errors amplify themselves, resulting in a product that is fundamentally unreliable and prone to failure.
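To see how a sensor error can turn a stable correction into an unstable one, consider a deliberately simplified sketch. It assumes a one-dimensional tilt, a purely proportional controller, and a sensor that misreports the tilt by a constant factor; `simulate_tilt`, `controller_gain`, and `sensor_scale` are hypothetical names, and the model ignores the physics of a real scooter.

```python
def simulate_tilt(initial_tilt, controller_gain, sensor_scale, steps):
    """Return the tilt history of a toy proportional balance controller.

    Each step the controller subtracts `controller_gain` times the measured
    tilt. The sensor reports `sensor_scale` times the true tilt, so a value
    of 1.0 means an accurate sensor and larger values mean over-reporting.
    """
    tilt = initial_tilt
    history = [tilt]
    for _ in range(steps):
        measured = sensor_scale * tilt
        tilt = tilt - controller_gain * measured  # correction based on the reading
        history.append(tilt)
    return history


accurate = simulate_tilt(1.0, controller_gain=1.5, sensor_scale=1.0, steps=20)
faulty = simulate_tilt(1.0, controller_gain=1.5, sensor_scale=1.6, steps=20)
print("final tilt, accurate sensor:", accurate[-1])
print("final tilt, faulty sensor:  ", faulty[-1])
```

Each step multiplies the tilt by `1 - controller_gain * sensor_scale`. With an accurate sensor that factor is -0.5, so the oscillation shrinks; with the over-reporting sensor it is roughly -1.4, so every correction overshoots further than the last.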

Feature Analysis: The Self-Destructive Cycle

To further illustrate this, let’s analyze some features that can contribute to palindromic failure:

- Recursive Algorithms: What it is: Algorithms that call themselves repeatedly. How it fails: If the base case (the condition that stops the recursion) is missing or unreachable, the algorithm recurses without bound, exhausting the call stack or other resources and eventually crashing the program. User Benefit: Properly designed recursive algorithms can efficiently solve complex problems, but flawed implementations can lead to catastrophic failures, so every recursive function deserves a check that each call moves closer to the base case.

- Feedback Loops: What it is: Systems where the output is fed back as input. How it fails: Positive feedback loops amplify changes, which can be desirable in some contexts (e.g., amplifying a signal) but disastrous if the initial input is an error or instability. User Benefit: Negative feedback, which counteracts deviations, is essential for control systems, but any feedback loop requires careful calibration to prevent runaway effects.

- Mirroring Functions: What it is: Functions that replicate or reflect data or processes. How it fails: If the original data or process is flawed, the mirroring function will simply replicate the flaw, potentially amplifying its impact. User Benefit: Mirroring functions can be useful for redundancy and backup, but they should not be used blindly without validating the integrity of the original data.

- Self-Referential Logic: What it is: Logic that refers to itself or its own state. How it fails: If the self-reference is based on incorrect assumptions or flawed data, it can create a self-fulfilling prophecy of failure. User Benefit: Self-referential logic can be used to create adaptive systems, but it requires careful design to prevent paradoxical behavior.

- Cascading Dependencies: What it is: Systems where multiple components are dependent on each other. How it fails: If one component fails, it can trigger a chain reaction of failures throughout the system, creating a palindromic effect of widespread disruption. User Benefit: Well-designed dependencies can improve modularity and maintainability, but they require careful management to prevent cascading failures.

- Automated Correction Mechanisms: What it is: Systems designed to automatically correct errors or deviations. How it fails: If the correction mechanism is based on flawed data or assumptions, it can inadvertently exacerbate the problem it is trying to solve. User Benefit: Automated correction mechanisms can improve efficiency and reliability, but they require careful calibration and monitoring to prevent unintended consequences.

- Repetitive Stress Testing: What it is: Testing a system by repeatedly subjecting it to the same stress conditions. How it fails: If the stress conditions are not representative of real-world usage, the testing may not reveal critical vulnerabilities, leading to a false sense of security. Furthermore, repetitive testing can sometimes induce failures that would not occur under normal circumstances. User Benefit: Stress testing is important for identifying weaknesses, but it should be complemented by other testing methods.
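The first item in the list above, a recursive function whose base case cannot be reached, is easy to demonstrate. The sketch below uses two hypothetical functions, `sum_to_unsafe` and `sum_to_safe`, written for this example. In Python the runaway recursion ends in a `RecursionError` once the interpreter's recursion limit is hit; other languages may crash with a stack overflow instead.

```python
def sum_to_unsafe(n):
    """Sum the integers from n down to 0, with a fragile base case."""
    # The base case matches only exactly 0, so a negative n never reaches it.
    if n == 0:
        return 0
    return n + sum_to_unsafe(n - 1)


def sum_to_safe(n):
    """Same computation, but inputs that cannot reach the base case are rejected."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return 0
    return n + sum_to_safe(n - 1)


print(sum_to_safe(4))  # 4 + 3 + 2 + 1 + 0

try:
    sum_to_unsafe(-1)
except RecursionError:
    print("unsafe version recursed until the interpreter stopped it")

try:
    sum_to_safe(-1)
except ValueError as err:
    print("safe version rejected the input:", err)
```

Validating the input up front turns a resource-exhausting failure into an immediate, descriptive error.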

Advantages and Value: Breaking the Cycle

Understanding the “palindrome for something that fails to work” principle offers several significant advantages. By recognizing the potential for self-destructive loops, designers and engineers can proactively identify and mitigate vulnerabilities in their systems. This can lead to more robust, reliable, and resilient products and processes.

One of the key benefits is improved risk management. By anticipating potential failure modes, organizations can develop contingency plans and implement safeguards to prevent or minimize the impact of failures. This can save time and money and limit reputational damage, since a flaw caught during design is generally cheaper to fix than one discovered after deployment.

Another advantage is enhanced product quality. By designing systems that are resistant to self-destructive loops, organizations can create products that are more reliable, durable, and user-friendly. This can lead to increased customer satisfaction and loyalty, because a product that does not amplify its own errors is more likely to behave predictably over time.

The real-world value of this understanding lies in preventing costly and potentially catastrophic failures. From preventing market crashes to avoiding product recalls, the ability to identify and mitigate palindromic failure modes can have a significant impact on businesses and society as a whole. For that reason, feedback loops and potential unintended consequences deserve explicit attention during the development process rather than after a failure has occurred.

In-Depth Review: Spotting the Flaws

To further illustrate the practical application of this concept, let’s consider a hypothetical review of a new smart home automation system. This system is designed to learn user preferences and automatically adjust settings such as lighting, temperature, and security. However, the system has a critical flaw: its learning algorithm is overly sensitive to initial conditions.

User Experience & Usability: Initially, the system is easy to set up and use. However, as the system learns, users may find that it becomes increasingly difficult to override its automated settings. For example, if a user manually adjusts the thermostat on a cold day, the system may learn to keep the temperature at an uncomfortably high level, even when the user prefers a cooler setting. This creates a frustrating user experience, as users are constantly fighting against the system’s automated adjustments.

Performance & Effectiveness: The system’s performance is initially impressive, as it quickly learns basic user preferences. However, as the system accumulates more data, its performance can degrade significantly. The overly sensitive learning algorithm can be easily misled by outliers or anomalies, leading to incorrect or even harmful adjustments. For example, if a user accidentally leaves a window open on a hot day, the system may learn to keep the air conditioning running at full blast, wasting energy and potentially damaging the system.
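One way to picture the sensitivity to outliers is to compare two ways of learning a preferred temperature from manual adjustments. This is a sketch under stated assumptions: the hypothetical system is assumed to learn from a short list of manual settings in degrees Celsius, and the functions `learned_setpoint_naive` and `learned_setpoint_robust` are invented for illustration.

```python
from statistics import mean, median


def learned_setpoint_naive(manual_settings):
    """Learn the preferred temperature as the plain average of manual settings."""
    return mean(manual_settings)


def learned_setpoint_robust(manual_settings):
    """Learn the preferred temperature as the median, which resists outliers."""
    return median(manual_settings)


# Four typical adjustments and one anomaly (for example, a very cold day).
settings = [21.0, 21.0, 22.0, 21.0, 30.0]
print("naive: ", learned_setpoint_naive(settings))
print("robust:", learned_setpoint_robust(settings))
```

A single anomalous setting pulls the average two degrees above the user's usual choice, while the median stays at the typical value. The median is only one option; limiting how far the learned value may move per update would serve a similar purpose.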

Pros:

- Easy to set up and use initially.

- Automates basic home settings.

- Can potentially save energy if properly configured.

- Offers remote control of home settings.

- Provides a centralized interface for managing multiple devices.

Cons/Limitations:

- Overly sensitive learning algorithm can lead to incorrect adjustments.

- Difficult to override automated settings.

- Can waste energy if not properly configured.

- Potential for security vulnerabilities.

- Requires a stable internet connection.

Ideal User Profile: This system is best suited for users who have very consistent routines and preferences. It is not recommended for users who frequently change their settings or who have complex or unpredictable lifestyles.

Key Alternatives: Alternative smart home systems offer more granular control over automated settings and provide more robust mechanisms for overriding the system’s adjustments. These systems may be more suitable for users who prefer a more hands-on approach to home automation.

Expert Overall Verdict & Recommendation: While this smart home automation system offers some potential benefits, its overly sensitive learning algorithm and difficulty in overriding automated settings make it a risky proposition. We cannot recommend this system without significant improvements to its core design. Users should carefully consider their needs and preferences before investing in this system.

Mitigating Systemic Failure: A Proactive Approach

In summary, the concept of a “palindrome for something that fails to work” highlights the importance of understanding feedback loops, unintended consequences, and potential self-destructive patterns in systems, products, and processes. By proactively identifying and mitigating these vulnerabilities, organizations can build more robust, reliable, and resilient solutions.

Moving forward, it is crucial to adopt a holistic approach to system design, considering not only the intended functionality but also the potential for unintended consequences. This requires careful analysis of feedback loops, rigorous testing under a variety of conditions, and a willingness to adapt and improve the system based on real-world experience. The future of reliable systems depends on our ability to anticipate and prevent these palindromic failure modes.

We encourage you to share your own experiences with self-defeating systems and processes in the comments below. By sharing our insights and learning from each other, we can collectively improve our ability to design and build systems that are truly resilient and effective. Consider also exploring advanced techniques for error handling and fault tolerance to further enhance system reliability.